|

I am a Ph.D. student in the Machine Learning Department, within the School of Computer Science at Carnegie Mellon University (CMU). I am advised by Jeff Schneider. Email / Google Scholar / Twitter / GitHub |

|

|

My current research focuses on methods for reasoning about uncertainty from data and their applications in decision making and control. I am interested in understanding why models make wrong predictions with high confidence, and in developing methods that use predictive uncertainty for better decision making. This spans topics in uncertainty quantification, Bayesian machine learning, probabilistic learning and inference, decision making under uncertainty, and reinforcement learning. |

|

|

|

Developed by: Youngseog Chung, Willie Neiswanger, Ian Char, Han Guo. Uncertainty Toolbox is a Python toolbox for evaluating and visualizing predictive uncertainty quantification. It includes a suite of evaluation metrics (accuracy, average calibration, adversarial group calibration, sharpness, proper scoring rules), plots to visualize confidence bands, prediction intervals, and calibration, and a recalibration function. It also includes a glossary and a list of relevant papers in uncertainty quantification. |
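To give a feel for two of the metrics named above, here is a minimal sketch of average calibration and sharpness for Gaussian predictions. It is not the toolbox's implementation: the function names `average_calibration_error` and `sharpness`, the grid of quantile levels, and the synthetic data are all assumptions made for illustration, and the definitions follow one common convention only.

```python
import random
from statistics import NormalDist


def average_calibration_error(pred_mean, pred_std, y_true, num_levels=99):
    """Mean absolute gap between expected and observed quantile coverage.

    Hypothetical helper: assumes each prediction is Gaussian with the
    given mean and (strictly positive) standard deviation.
    """
    levels = [(i + 1) / (num_levels + 1) for i in range(num_levels)]
    total_gap = 0.0
    for p in levels:
        # Count observations that fall at or below the predicted p-quantile.
        below = sum(
            y <= NormalDist(mu, sigma).inv_cdf(p)
            for mu, sigma, y in zip(pred_mean, pred_std, y_true)
        )
        total_gap += abs(below / len(y_true) - p)
    return total_gap / num_levels


def sharpness(pred_std):
    """Root mean of the predicted variances; smaller means tighter predictions."""
    return (sum(s * s for s in pred_std) / len(pred_std)) ** 0.5


# Synthetic check: the data are standard normal, so a predicted standard
# deviation of 1.0 matches the data and 0.2 is overconfident.
rng = random.Random(0)
n = 2000
pred_mean = [0.0] * n
y_true = [rng.gauss(0.0, 1.0) for _ in range(n)]

matched = average_calibration_error(pred_mean, [1.0] * n, y_true)
overconfident = average_calibration_error(pred_mean, [0.2] * n, y_true)
print(f"matched: {matched:.3f}, overconfident: {overconfident:.3f}")
print(f"sharpness of the overconfident model: {sharpness([0.2] * n):.3f}")
```

The overconfident model is sharper (smaller predicted spread) but worse calibrated, which is why the two quantities are usually reported together.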

|

|

|

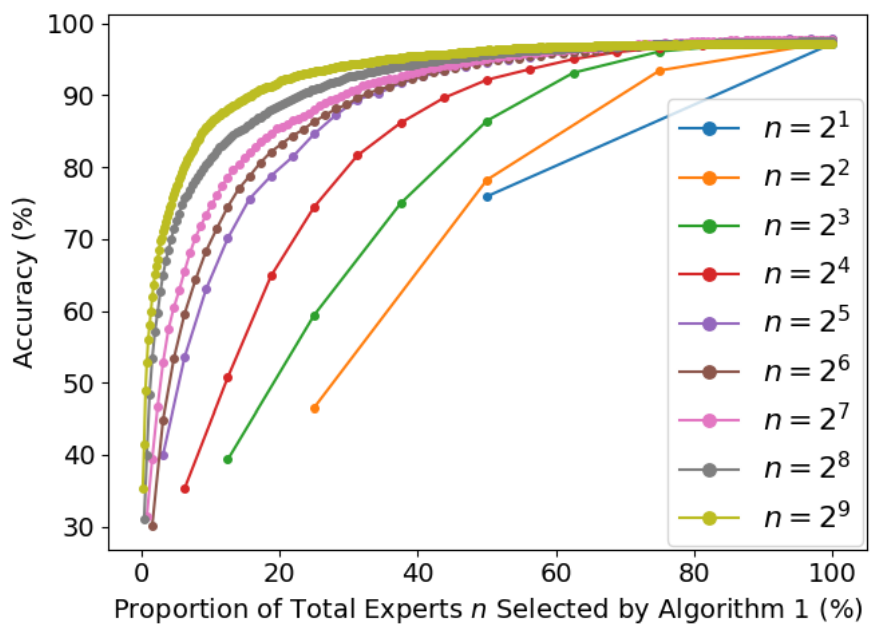

Youngseog Chung, Dhruv Malik, Jeff Schneider, Yuanzhi Li, Aarti Singh arXiv 2024 Is parameter count all that matters for representation power in Soft Mixtures of Experts (MoE)? How many experts should you use in a Soft MoE? What are the implications for expert specialization? How would one even define expert specialization for Soft MoE? We tackle these questions and more in our work on implicit biases within Soft MoE. |

|

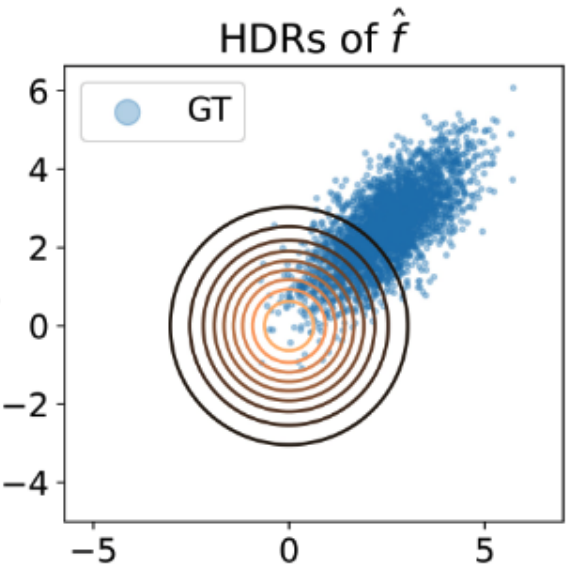

Youngseog Chung, Ian Char, Jeff Schneider ICML 2024 How should one define calibration for multi-dimensional regression models? We define such a notion of calibration by leveraging the concept of highest density regions (HDR). Further, we propose a metric for multi-dimensional calibration and a recalibration algorithm to optimize for this calibration metric. |

|

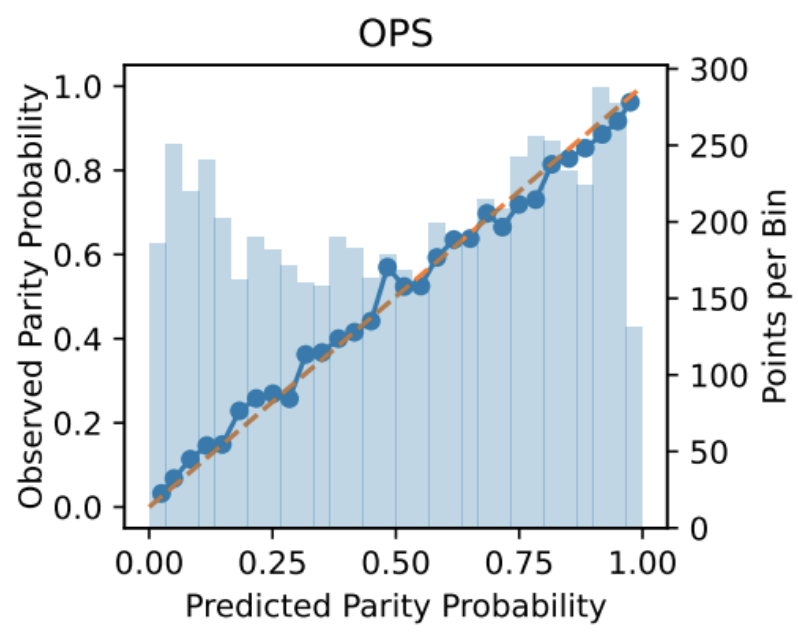

Youngseog Chung, Aaron Rumack, Chirag Gupta UAI 2023 (Oral) In a sequential prediction setting, we show that calibrated regression models do not produce calibrated predictions for increases/decreases in consecutive observations, i.e. they are not parity calibrated. We define the notion of parity calibration and propose an online recalibration algorithm to achieve parity calibration. |

|

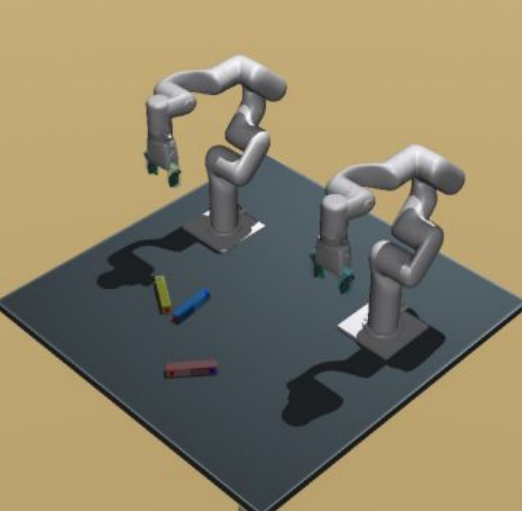

Satoshi Kataoka, Youngseog Chung, Seyed Kamyar Seyed Ghasemipour, Pannag Sanketi, Shixiang Shane Gu, Igor Mordatch arXiv 2023 Robotic manipulation with multi-arm platforms remains a challenging field, and we demonstrate a significant advancement by using deep reinforcement learning to effectively train dual-arm robots for complex manipulation tasks. By focusing on a novel U-Shape Magnetic Block Assembly Task without predefined controllers or demonstrations, we achieve substantial success in both simulated and real-world environments, indicating a promising direction for enhancing real-world robotic dexterity through Sim2Real transfer methods. |

|

Conor Igoe, Youngseog Chung, Ian Char, Jeff Schneider arXiv 2022 Previous methods utilizing test-time gradients for OOD detection have shown competitive performance, but there are misconceptions about the necessity of gradients. In this work, we provide an in-depth analysis of test-time gradients and propose a general, non-gradient-based method of OOD detection. |

|

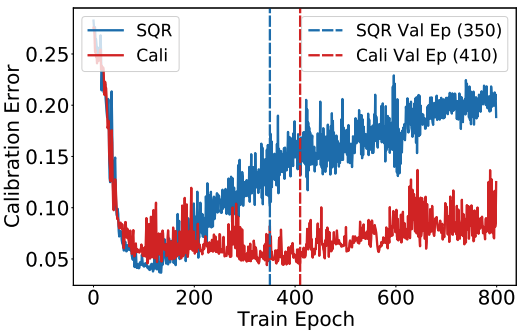

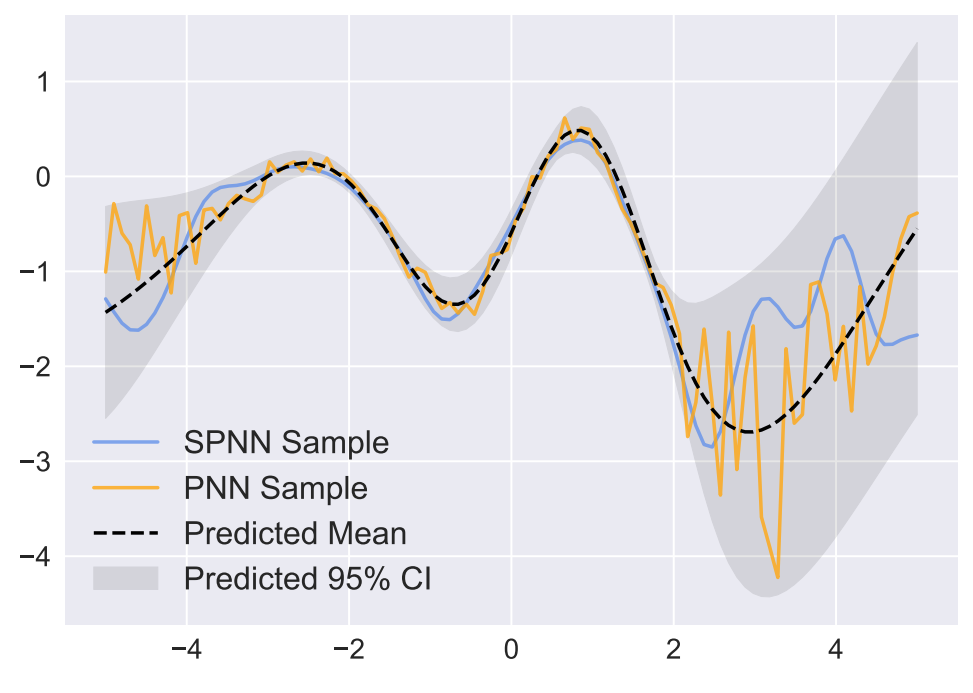

Youngseog Chung, Willie Neiswanger, Ian Char, Jeff Schneider NeurIPS 2021 We propose two algorithms to learn the conditional quantiles from data for predictive uncertainty quantification. One algorithm utilizes consistent estimators of the conditional densities. For the second algorithm, we propose a loss function to directly optimize calibration and sharpness. |

|

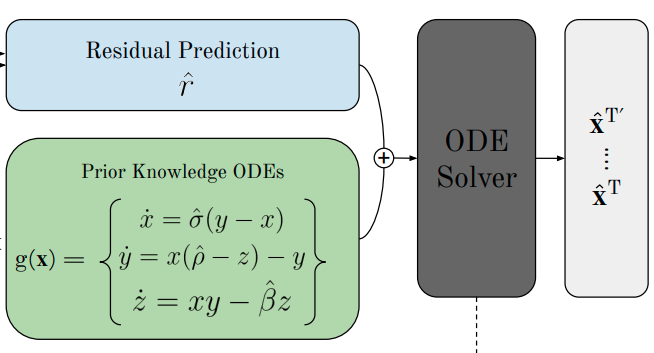

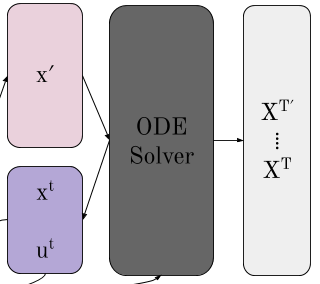

Viraj Mehta, Ian Char, Willie Neiswanger, Youngseog Chung, Andrew Oakleigh Nelson, Mark D Boyer, Egemen Kolemen, Jeff Schneider IEEE Conference on Decision and Control (CDC) 2021 We introduce an algorithm for modeling dynamical systems that uses neural ordinary differential equations (ODEs). By using ODEs, we empirically show significant improvements in sample efficiency and in performance under parameter shift when learning the dynamics model from data. |

|

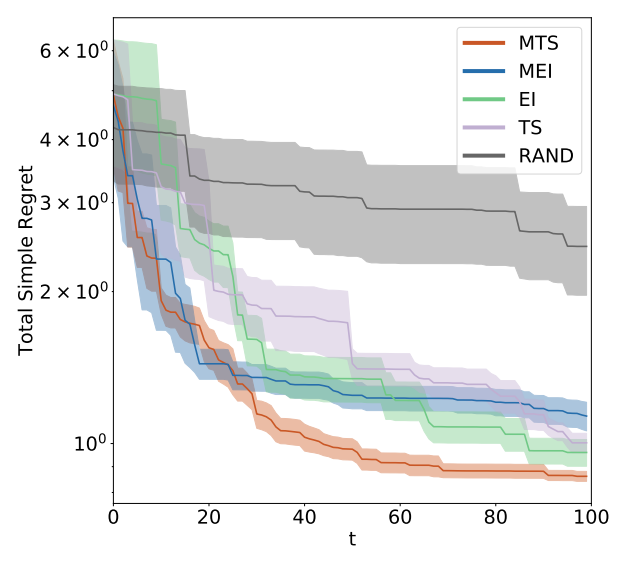

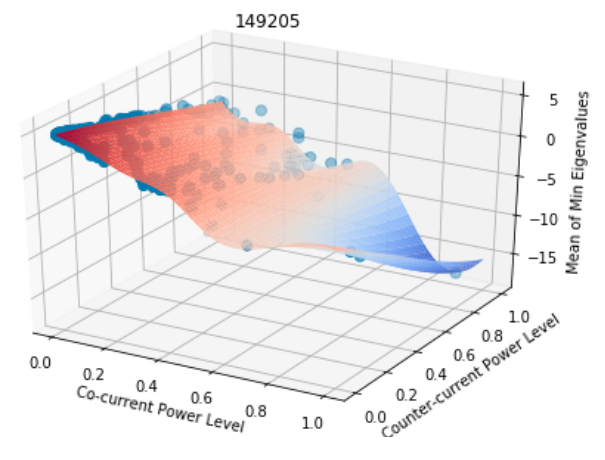

Ian Char, Youngseog Chung, Willie Neiswanger, Kirthevasan Kandasamy, Andrew Oakleigh Nelson, Mark D Boyer, Egemen Kolemen, Jeff Schneider NeurIPS 2019 We propose a contextual Bayesian optimization method based on Thompson sampling in the offline setting. The offline setting assumes that the user can actively choose contexts to query, as opposed to the online setting, where contexts are chosen by nature. |

|

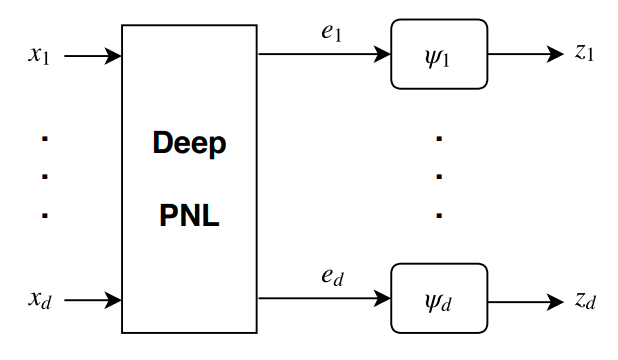

Youngseog Chung, Joon Kim, Tom Yan, Helen Zhou Preprint 2019 We propose an end-to-end learning procedure with deep neural networks for causal discovery. The algorithm is designed to identify the causal directions between multiple variables that have a post-nonlinear causal relationship. |

|

|

|

Ian Char*, Youngseog Chung*, Rohan Shah, Willie Neiswanger, Jeff Schneider NeurIPS 2023 Workshop on Adaptive Experimental Design and Active Learning in the Real World

|

|

Viraj Mehta, Ian Char, Willie Neiswanger, Youngseog Chung, Andrew Oakleigh Nelson, Mark D Boyer, Egemen Kolemen, Jeff Schneider ICLR 2020 Integration of Deep Neural Models and Differential Equations Workshop

|

|

Youngseog Chung*, Ian Char*, Willie Neiswanger, Kirthevasan Kandasamy, Andrew Oakleigh Nelson, Mark D Boyer, Egemen Kolemen, Jeff Schneider NeurIPS 2019 Workshop on Machine Learning and the Physical Sciences

|

Website template taken from Jon Barron's website.